CrowdStrike BSOD Outage: What We Know

We've compiled key resources to provide context and help the community answer common questions about the CrowdStrike BSOD outage:

- What happened?

- How could CrowdStrike cause a BSOD?

- Why does Microsoft allow access to the kernel?

- What was the impact of the outage?

- What are the steps for remediation?

- Phishing URLs

- Tracking Suspicious Domains

What happened?

On July 19th, 2024, at 00:09 UTC-4, CrowdStrike pushed a detection update to its Falcon sensors on Windows. This content update included a configuration file, known as a “Channel File”, containing logic for behavioral detection. The problematic channel file, C-00000291-00000000-00000029.sys, caused the Windows operating system to crash, leading to the widely observed blue screen of death (BSOD). Microsoft estimated that 8.5 million Windows devices were affected.

CrowdStrike determined that Windows hosts running Falcon sensor version 7.11 or later that were online between 00:09 UTC-4 and 01:27 UTC-4 on July 19th, 2024, may have received the faulty configuration file and crashed.
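For triage, the exposure window above can be checked programmatically. The sketch below uses a hypothetical helper, `may_have_received_faulty_file`, which treats a host as possibly affected if its sensor version is 7.11 or later and its online interval overlaps 00:09 to 01:27 UTC-4 (04:09 to 05:27 UTC). It assumes timezone-aware datetimes and version strings of the form "major.minor[.build]"; it is an illustration, not a CrowdStrike tool.

```python
from datetime import datetime, timedelta, timezone

# Exposure window stated by CrowdStrike, expressed in UTC-4.
UTC_MINUS_4 = timezone(timedelta(hours=-4))
WINDOW_START = datetime(2024, 7, 19, 0, 9, tzinfo=UTC_MINUS_4)
WINDOW_END = datetime(2024, 7, 19, 1, 27, tzinfo=UTC_MINUS_4)


def may_have_received_faulty_file(
    online_from: datetime, online_until: datetime, sensor_version: str
) -> bool:
    """Hypothetical triage helper.

    Assumes timezone-aware datetimes and a "major.minor[.build]" version string.
    """
    major, minor = (int(part) for part in sensor_version.split(".")[:2])
    if (major, minor) < (7, 11):
        return False
    # Two intervals overlap if each one starts before the other ends.
    return online_from <= WINDOW_END and online_until >= WINDOW_START


print(WINDOW_START.astimezone(timezone.utc).strftime("%H:%M UTC"))  # 04:09 UTC
```

Checking interval overlap, rather than a single timestamp, catches hosts that came online before the window opened and stayed up through it.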

C-00000291-00000000-00000029.sys was the channel file that caused the system crashes. According to CrowdStrike, this channel file contains information the sensor uses to evaluate named pipe execution on Windows. Named pipes are a mechanism for interprocess communication, allowing processes to exchange data through a pipe identified by name. Several popular command-and-control (C2) frameworks have been observed using named pipes.
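To make "evaluating named pipes" concrete, here is a toy rule that flags pipes whose names match suspicious patterns. This is a minimal sketch only: the function name and the patterns are hypothetical placeholders and are not CrowdStrike's actual detection content or logic.

```python
from fnmatch import fnmatchcase

# Windows named pipes live in the \\.\pipe\ namespace.
PIPE_PREFIX = "\\\\.\\pipe\\"

# Placeholder patterns for illustration only; NOT real CrowdStrike detection content.
SUSPICIOUS_PIPE_PATTERNS = ("example_c2_*", "demo-beacon-*")


def is_suspicious_pipe(pipe_path: str) -> bool:
    """Return True if the pipe name matches any suspicious pattern."""
    name = pipe_path.lower()
    if name.startswith(PIPE_PREFIX):
        name = name[len(PIPE_PREFIX):]
    # Windows pipe names are case-insensitive, so compare in lowercase.
    return any(fnmatchcase(name, pattern) for pattern in SUSPICIOUS_PIPE_PATTERNS)


print(is_suspicious_pipe("\\\\.\\pipe\\Example_C2_1337"))  # True
print(is_suspicious_pipe("\\\\.\\pipe\\spoolss"))          # False
```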

How could CrowdStrike cause a BSOD?

To detect malicious kernel drivers and rootkits on Windows systems, CrowdStrike and other EDR agents must be built as Early Launch Anti-Malware (ELAM) drivers, which run in kernel mode and load before other boot-start drivers are initialized.

The CrowdStrike agent loaded the faulty configuration file during the Windows boot process, which resulted in an invalid memory access in kernel mode. Whereas a user-mode crash has limited impact on the underlying operating system, an unhandled error in kernel mode halts the system to prevent possible data corruption, which users see as the BSOD. The subsequent boot loop occurred because the CrowdStrike agent reloaded the same channel file each time the system restarted.

Why does Microsoft allow access to the kernel?

While Apple has restricted third-party macOS software from running at the kernel level, Microsoft must give security vendors access to the kernel because of a 2009 agreement with the European Commission, reached following an antitrust complaint. Microsoft introduced Kernel Patch Protection in 2005 to prevent third-party security software from “patching” the kernel, but its exceptions for Microsoft's own security software drew criticism from antivirus vendors.

What was the impact of the outage?

Major organizations around the world in the transportation, financial services, and healthcare sectors reported disruptions beginning July 19th. The widespread impact reflects CrowdStrike's popularity as the EDR of choice for many organizations: over half of the Fortune 500 companies use it (Reuters). UpGuard has shared a list of organizations impacted by the CrowdStrike incident.

Due to the widespread operational and financial impact, US House leaders have called on CrowdStrike CEO George Kurtz to testify before Congress (AP News).

With regard to cyber insurance coverage, Fitch Ratings said it expects insurers to avoid a major financial impact from the outage. Loretta Worters of the Insurance Information Institute said that cyber insurance does not typically cover downtime caused by non-malicious events at service providers (Reuters).

What are the steps for remediation?

CrowdStrike shared steps for regaining access to impacted devices, which Qualys has documented. These steps involve either reverting to a prior snapshot where available, or accessing the machine and removing the problematic file.

Recovery Steps for Windows Machines

1. Boot Windows into Safe Mode or the Windows Recovery Environment.

2. Navigate to the C:\Windows\System32\drivers\CrowdStrike directory.

3. Locate and delete the file matching “C-00000291*.sys”.

4. Boot the host normally.
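Steps 2 and 3 can be sketched in Python. This is a minimal illustration, assuming Python is available wherever the affected disk is accessible (for example, a rescue machine with the disk attached under a different drive letter). The function name and the `dry_run` flag are hypothetical, and the default only lists matches without deleting anything.

```python
from pathlib import Path

# Directory from step 2. On a rescue machine with the affected disk attached,
# pass the equivalent path on that disk instead (e.g. a different drive letter).
CROWDSTRIKE_DIR = Path(r"C:\Windows\System32\drivers\CrowdStrike")
FAULTY_PATTERN = "C-00000291*.sys"


def remove_faulty_channel_files(directory: Path = CROWDSTRIKE_DIR, dry_run: bool = True) -> list[Path]:
    """Find (and, unless dry_run is True, delete) files matching C-00000291*.sys."""
    # Path.glob is case-insensitive on Windows but case-sensitive on POSIX systems.
    matches = sorted(directory.glob(FAULTY_PATTERN))
    if not dry_run:
        for path in matches:
            path.unlink()
    return matches


if __name__ == "__main__":
    for match in remove_faulty_channel_files(dry_run=True):
        print("would delete:", match)
```

Running once with `dry_run=True` before deleting makes it easy to confirm that only the C-00000291 channel file will be removed and other channel files are left in place.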

Recovery Steps for Machines on AWS

1. Detach the EBS volume from the impacted EC2 instance.

2. Attach the EBS volume to a new EC2 instance.

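3. Delete the file matching “C-00000291*.sys” from the CrowdStrike driver folder (\Windows\System32\drivers\CrowdStrike) on the attached volume.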

4. Detach the EBS volume from the new EC2 instance.

5. Attach the EBS volume back to the impacted EC2 instance.
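The volume swap above can be scripted. This is a minimal sketch, assuming a boto3 EC2 client is passed in; the helper name, instance IDs, device names, and the `repair` callback are hypothetical parameters. Stopping the impacted instance first and starting it at the end are added steps, since AWS does not allow detaching a root volume from a running instance.

```python
from typing import Any, Callable


def repair_via_rescue_instance(
    ec2: Any,  # e.g. boto3.client("ec2")
    volume_id: str,
    impacted_instance_id: str,
    rescue_instance_id: str,
    original_device: str,  # device name the volume used on the impacted instance
    rescue_device: str,    # a free device name on the rescue instance
    repair: Callable[[], None],
) -> None:
    """Hypothetical helper automating the EBS volume swap; `repair` performs step 3."""
    # A root volume cannot be detached from a running instance, so stop it first.
    ec2.stop_instances(InstanceIds=[impacted_instance_id])
    ec2.get_waiter("instance_stopped").wait(InstanceIds=[impacted_instance_id])

    # Steps 1-2: move the volume to the rescue instance.
    ec2.detach_volume(VolumeId=volume_id, InstanceId=impacted_instance_id)
    ec2.get_waiter("volume_available").wait(VolumeIds=[volume_id])
    ec2.attach_volume(VolumeId=volume_id, InstanceId=rescue_instance_id, Device=rescue_device)
    ec2.get_waiter("volume_in_use").wait(VolumeIds=[volume_id])

    # Step 3: delete C-00000291*.sys from the CrowdStrike folder on the attached volume.
    repair()

    # Steps 4-5: move the volume back to the impacted instance.
    ec2.detach_volume(VolumeId=volume_id, InstanceId=rescue_instance_id)
    ec2.get_waiter("volume_available").wait(VolumeIds=[volume_id])
    ec2.attach_volume(VolumeId=volume_id, InstanceId=impacted_instance_id, Device=original_device)
    ec2.get_waiter("volume_in_use").wait(VolumeIds=[volume_id])

    # Boot the repaired instance.
    ec2.start_instances(InstanceIds=[impacted_instance_id])
```

In practice, `repair` would block until an operator (or a remote command on the rescue instance) has removed the file; passing the client in also makes the sequence easy to test without touching AWS.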

Recovery Steps for Machines on Azure

1. Log in to the Azure console.

2. Go to Virtual Machines and select the affected VM.

3. In the upper left of the console, click “Connect”.

4. Click “More ways to Connect” and then select “Serial Console”.

5. Once SAC has loaded, type in ‘cmd’ and press Enter.

6. Type ‘ch -si 1’ and press the space bar.

7. Enter Administrator credentials.

8. Type one of the following commands:

   - ‘bcdedit /set {current} safeboot minimal’ (Safe Mode)

   - ‘bcdedit /set {current} safeboot network’ (Safe Mode with Networking)

9. Restart the VM.

10. To confirm the boot state, run the command: ‘wmic COMPUTERSYSTEM GET BootupState’.

One of the major hurdles impacting recovery is the manual effort required to regain access to affected devices. For BitLocker-encrypted devices, the BitLocker recovery key is needed to recover the device. Microsoft has also released a recovery tool to help users recover impacted devices.

Phishing URLs

While CrowdStrike worked to identify what caused systems to crash and impacted organizations tried to continue business operations where possible, others were quick to exploit the outage for their own gain. Researchers identified several domains registered in connection with the outage (Twitter Post).

Some sites provided recovery instructions matching those published by CrowdStrike, but also listed contact information inviting impacted users to reach out.

Another site contained donation links for PayPal and Bitcoin.

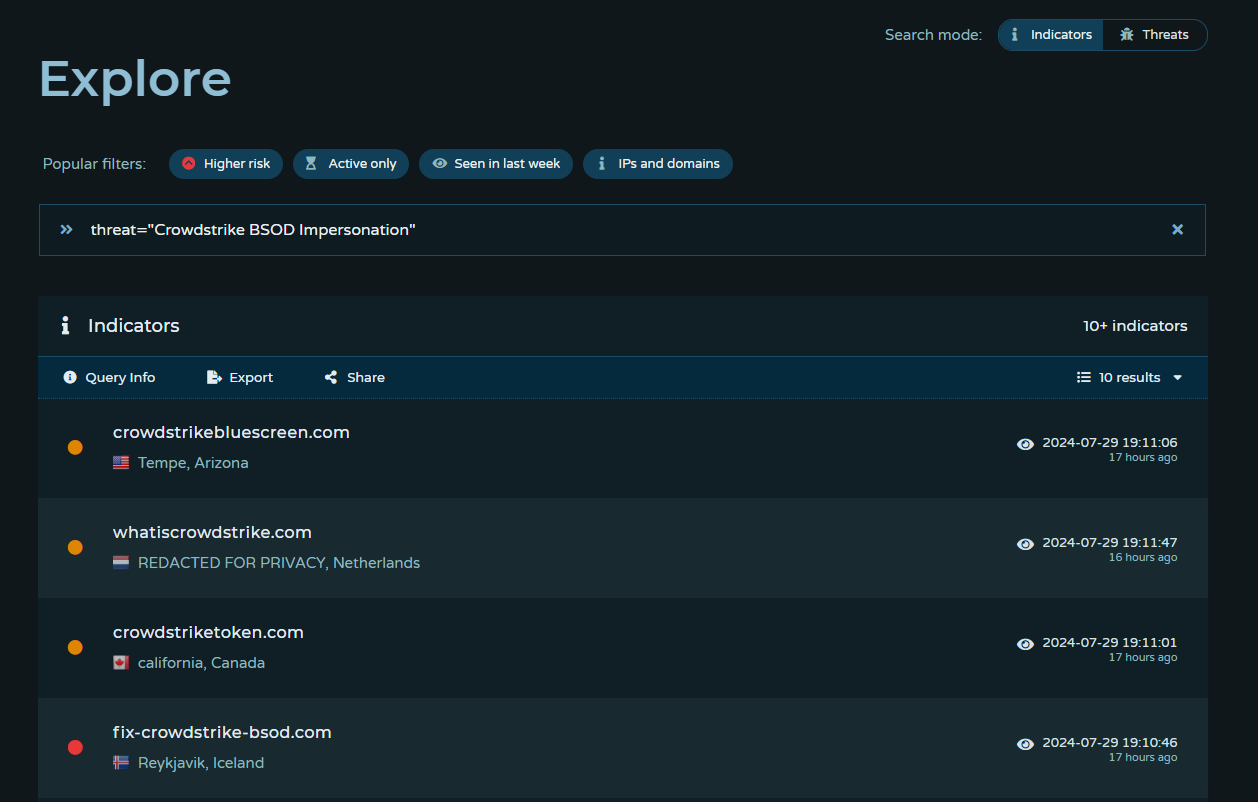

Tracking Suspicious Domains

Potential lookalike and suspicious domains related to the CrowdStrike outage have been identified, aggregated, and added to the Pulsedive platform. This data can be queried in Pulsedive using the Explore query threat="CrowdStrike BSOD Impersonation" and can be exported in multiple formats (CSV, STIX 2.1, JSON).
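As a rough illustration of how outage-themed lookalike domains can be spotted, the sketch below flags domains that pair the brand name with an outage-related keyword. This is a hypothetical keyword heuristic chosen for illustration; it is not how Pulsedive identified the domains above, and the keyword list and function name are assumptions.

```python
# Hypothetical keyword heuristic for illustration; NOT Pulsedive's method.
BRAND = "crowdstrike"
LURE_WORDS = ("bsod", "outage", "fix", "recovery", "bluescreen", "update")
LEGITIMATE_DOMAINS = ("crowdstrike.com",)


def looks_like_outage_lure(domain: str) -> bool:
    """Flag domains that pair the brand name with an outage-related keyword."""
    d = domain.lower().rstrip(".")
    # Never flag the legitimate domain or its subdomains.
    if any(d == legit or d.endswith("." + legit) for legit in LEGITIMATE_DOMAINS):
        return False
    # Ignore hyphens so "crowd-strike-bsod" and "crowdstrikebsod" compare alike.
    compact = d.replace("-", "")
    return BRAND in compact and any(word in compact for word in LURE_WORDS)


candidates = ["crowdstrike-bsod.com", "www.crowdstrike.com", "crowdstrikeoutage.info", "example.org"]
print([d for d in candidates if looks_like_outage_lure(d)])
# ['crowdstrike-bsod.com', 'crowdstrikeoutage.info']
```

Simple substring matching like this is noisy in both directions, so it suits first-pass filtering of candidates rather than final verdicts.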

References

- https://blogs.microsoft.com/blog/2024/07/20/helping-our-customers-through-the-crowdstrike-outage/

- https://www.crowdstrike.com/falcon-content-update-remediation-and-guidance-hub/

- https://www.crowdstrike.com/blog/falcon-update-for-windows-hosts-technical-details/

- https://news.delta.com/update-delta-customers-ceo-ed-bastian

- https://blog.qualys.com/vulnerabilities-threat-research/2024/07/19/global-outage-alert-windows-bsod-crisis-following-crowdstrike-update-recovery-steps-qualys-assurance

- https://www.upguard.com/crowdstrike-outage

- https://www.reuters.com/technology/cybersecurity/crowdstrike-update-that-caused-global-outage-likely-skipped-checks-experts-say-2024-07-20/

- https://x.com/JCyberSec_/status/1814287979453497410